HaCRS Improves Mechanical Phish Bug Finding with Human Assistance

26 Sep 2017

Overview

This post describes a system we recently developed to re-introduce humans into automated vulnerability discovery. Human experts can find bugs that automated bug finding cannot reach, but we were curious whether untrained humans can also help automated systems do better. We found that by integrating human labor with no prior bug-finding experience, otherwise automated systems can overcome some of their shortcomings and find more bugs than they could on their own. We recruited 183 workers through Amazon Mechanical Turk, who helped increase program coverage. This led to a 55% improvement in finding bugs in Cyber Grand Challenge (CGC) binaries. This blog post discusses key insights and material that did not fit into our forthcoming CCS paper (pdf and bib) "Rise of the HaCRS". The paper was a collaboration between UC Santa Barbara, Arizona State University, and Northeastern University.

Update: additional materials (slides, video) available here.

Introduction

Mechanical Phish is an open source Cyber Reasoning System (CRS) that placed third in last year's CGC event. CGC was a fully automated hacking competition with no human interaction, the first computer vs. computer hacking contest. While this pushed automated reasoning forward, it also highlighted shortcomings in the state of the art of automated bug finding. In this project we enhance fully automated bug finding by adding human assistance in areas where human intuition beats computing power.

A shortcoming of fully automated analyses is that tools start without real input and have to explore programs on their own. Even without intuition, these tools can fare well. For example, AFL can reconstruct the JPG file format on its own, which is impressive. But we were curious whether better input seeds help automated reasoning, and found through experimentation that they improve results significantly. In particular, human intuition can distinguish states that are logically different, e.g., winning a game as opposed to losing it. An automated system might be able to tell such states apart, but it does not understand what the difference implies. More generally, semantic hints given by programs go unnoticed by a CRS.

We developed a prototype system which we tested on Amazon Mechanical Turk, evaluating against the CGC sample binary corpus. The results back our suspicion that new inputs can improve CRS findings significantly.

Mechanical Turk

Amazon Mechanical Turk offers access to human workers: requesters post tasks to be solved for money. The service is often used to gather data where automation is infeasible or results must come from a human (e.g., surveys). While our system is not designed specifically for Mechanical Turk, we chose the platform for its large pool of workers. In HaCRS, a "Tasklet" is a request for human work to solve an issue the CRS can't deal with on its own. We issue these in steps: a tasklet asks for coverage to be raised to a specific target, and once that target is reached we issue the next one with a higher target.

We armed our system with Amazon credits and let it iteratively issue HITs (Human Intelligence Tasks, the unit of work on Mechanical Turk), requesting labor to increase coverage so that Mechanical Phish could find more bugs. The system generally requested coverage increases of 10% and scaled the payout based on difficulty. For example, a tasklet we considered easy would earn $1, while a particularly hard one would be worth $2.50. Performance was measured in triggered program transitions, and we provided live feedback as the Turkers were exercising the programs (see screenshots below). We also paid bonuses for performance that went beyond the requirement, to encourage Turkers to exercise programs further. In total we paid $1,100 in base payments and bonuses to 183 Turkers.
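
The tasklet parameters described above can be sketched in a few lines. This is an illustrative sketch only, not the HaCRS implementation: the function names, the `difficulty` score in [0, 1], and the linear payout scale are assumptions. Only the 10% coverage step and the $1 to $2.50 payout range come from the text.

```python
COVERAGE_STEP_PERCENT = 10  # requested coverage increase per tasklet (from the post)
MIN_PAYOUT = 1.00           # payout for an "easy" tasklet (from the post)
MAX_PAYOUT = 2.50           # payout for a "particularly hard" tasklet (from the post)


def target_transitions(current: int) -> int:
    """Transitions required for payout: 10% more than now, and at least one more."""
    # Integer ceiling of current * 10 / 100, avoiding floating-point rounding.
    extra = (current * COVERAGE_STEP_PERCENT + 99) // 100
    return current + max(1, extra)


def base_payout(difficulty: float) -> float:
    """Assumed linear scale; difficulty is a hypothetical score in [0, 1]."""
    difficulty = min(1.0, max(0.0, difficulty))
    return round(MIN_PAYOUT + difficulty * (MAX_PAYOUT - MIN_PAYOUT), 2)
```

With these assumptions, a program with 200 known transitions would yield a tasklet asking for 220, and the next tasklet would start from whatever coverage was actually reached.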

HaCRS User Interface: The Human-Automation Link (HAL)

Because we hoped to enroll large numbers of unskilled workers in our experiments, the UI had to be self-explanatory in order to scale. Issues with the UI would lead to confused emails and lost time on both ends. We tried to fit in all the information the Turkers could need and to offer every option that could make them work faster.

Mechanical Turk does not allow requesters to have Turkers install software for tasks. This is for good reason, as requesters could exploit it to make Turkers install malware or other unwanted software. However, it also presented a challenge for us: our interface needed to be accessible to Turkers while observing this restriction. We decided to build a Web UI for our system, embedding a noVNC JavaScript window in which we present the interaction terminal. This choice also keeps us flexible for the future: we can reuse most of the UI while pointing noVNC at other targets.

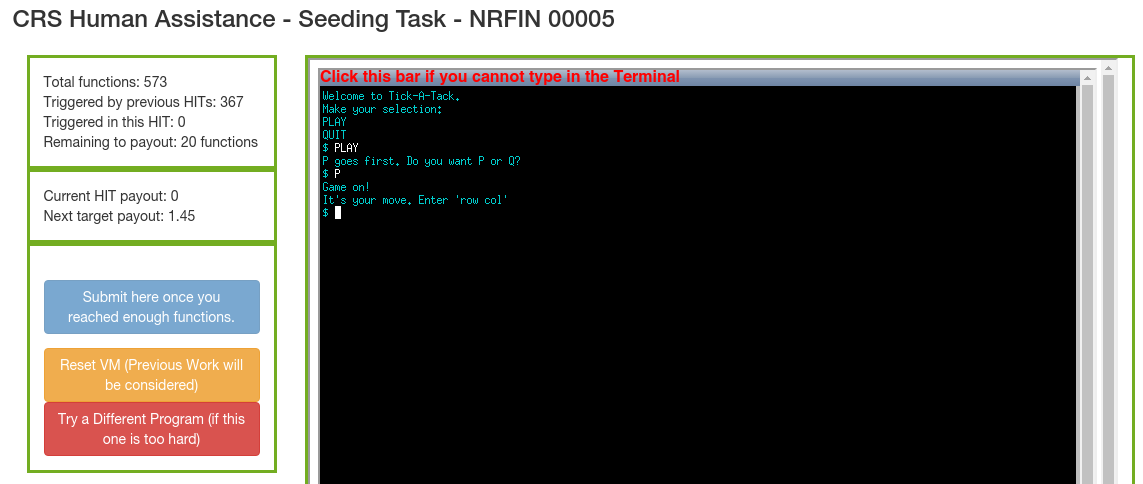

Above we see the HaCRS Human-Automation Link (HAL). Turkers can type in the terminal to interact with the program. To the left is the progress window. We see how many transitions have been triggered and how many more need to be triggered to receive a payout.

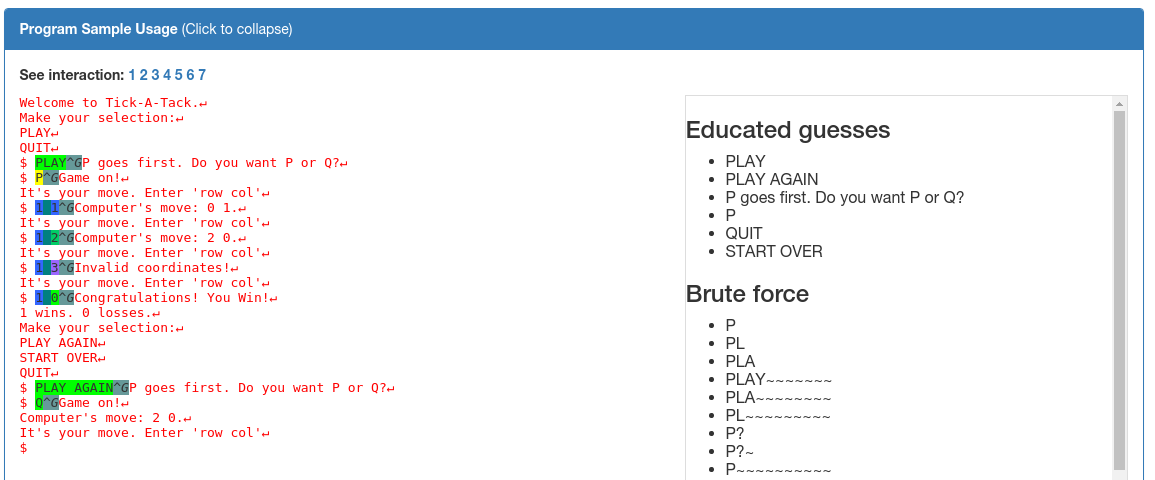

Turkers see previous input/output sequences and can restore these states by clicking on a character in the interaction. All inputs are available to all Turkers, i.e., if any Turker manages to reach a previously unknown program state, any other Turker can pick up from there and explore further without manually repeating all the steps. A click spawns a new Docker container in the backend, replays the interaction, and makes the restored session available to the Turker via noVNC. Note that such replay is only possible for systems where randomness is controlled; this is a general limitation and not specific to HaCRS.
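
To make the replay idea concrete, here is a minimal sketch of how a click on a transcript character could be turned into the input that must be replayed. The `Event` type, the `clicked_offset` convention, and the function name are hypothetical and not taken from HaCRS. The sketch also assumes the target is deterministic, as noted above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    direction: str  # "in" for Turker input, "out" for program output
    data: str


def replay_inputs(events: List[Event], clicked_offset: int) -> str:
    """Return the input to feed a fresh instance to restore the clicked state.

    clicked_offset is a hypothetical index into the concatenated transcript
    (inputs and outputs in order). Input characters up to and including that
    offset are collected; outputs are skipped because the program regenerates
    them during replay.
    """
    replay = []
    position = 0
    for event in events:
        for char in event.data:
            if position > clicked_offset:
                return "".join(replay)
            if event.direction == "in":
                replay.append(char)
            position += 1
    return "".join(replay)
```

The returned string would then be written to the freshly started program before handing the terminal over to the Turker.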

We also offer programmatic input suggestions based on strings that might be encountered later in the program, which Mechanical Phish lacks the program context to use directly. These strings can inspire humans to exercise the program more thoroughly.
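
The post does not say how these suggestions are generated. One simple way to obtain candidate strings, shown here purely as an assumption, is to pull runs of printable ASCII out of the binary, similar in spirit to the Unix `strings` tool. The function name and the minimum length of 4 are illustrative choices.

```python
import re
from typing import List


def candidate_strings(binary: bytes, min_length: int = 4) -> List[str]:
    """Extract unique runs of printable ASCII, in order of first appearance."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_length)
    seen: List[str] = []
    for match in pattern.finditer(binary):
        text = match.group().decode("ascii")
        if text not in seen:
            seen.append(text)
    return seen
```

A list like this tells a human which commands a program might accept, even though an automated tool cannot tell when each one is meaningful.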

Sample program: NRFIN_00005

We will demonstrate HaCRS capabilities using NRFIN_00005. This application is a game described as "Tic-Tac-Toe, with a few modifications". The player does not see the game board and has to keep track of the state on their own. See the screenshots above for gameplay and sample inputs. The game has a null pointer dereference bug, which is triggered by typing "START OVER" after one round has been played. Other strings will not trigger the vulnerability.

Driller and AFL (the two main components of Mechanical Phish) were not able to play the game successfully, as they cannot reason about the state of the game. Our Turkers however were able to win the game easily, but typed strings such as "PLAY AGAIN" afterwards, which does not trigger the bug. Next, Mechanical Phish picks up the Turker input and mutates it towards "START OVER", as it recognizes this as a special state, and crashes the program.
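
The division of labor described above can be illustrated with a toy model. Everything here is a simplification and an assumption: `toy_target` is a stand-in for the real binary, `"WIN"` is a placeholder for the moves that complete a round, and replacing the last line with dictionary tokens is just one simple mutation strategy, not necessarily what Mechanical Phish does.

```python
from typing import Iterable, List, Optional


def toy_target(lines: List[str]) -> None:
    """Toy stand-in for the bug: crash only if "START OVER" follows a played round."""
    round_played = False
    for line in lines:
        if line == "WIN":  # hypothetical placeholder for a completed round
            round_played = True
        elif line == "START OVER" and round_played:
            raise RuntimeError("simulated null pointer dereference")


def mutate_last_line(seed: List[str], dictionary: Iterable[str]) -> Optional[List[str]]:
    """Replace the seed's final line with each token; return the first crashing input."""
    for token in dictionary:
        candidate = seed[:-1] + [token]
        try:
            toy_target(candidate)
        except RuntimeError:
            return candidate
    return None
```

In this model, a human seed such as `["WIN", "PLAY AGAIN"]` gets the program past the part that needs game understanding, so a cheap mutation of the final line is enough to reach the crash. A seed that never completes a round does not crash no matter which token is substituted.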

Takeaways

Our key takeaways from this project are as follows:

- Input seeds can impact CRS results significantly, and should be used in conjunction with symbolic execution and fuzzing.

- Even unskilled users' intuition can improve CRS results.

- Mechanical Turk turned out to be a good platform for collecting diverse program interactions.

- Semi-experts did not fare significantly better than non-expert users. However, this could be a limitation of our system.

Future Work

For HaCRS, we used humans to increase program coverage and reach states that Mechanical Phish could turn into crashes. However, we envision involving humans in other areas to enhance CRSs, for example by enrolling them more directly in exploit generation or in testing patches to verify fixes. These tasks might be less suitable for unskilled labor and will require more research. Furthermore, finding optimal incentive structures could increase the performance of such systems.

Conclusion

We had a total of 183 Turkers work for us at a combined cost of $1,100. These Turkers helped Mechanical Phish find 55% more bugs than it could on its own. HaCRS is a step towards augmenting traditional CRSs with human intuition where computers are still lacking. Such a combined approach should be explored further to overcome CRS obstacles. Our paper features case studies and implementation details about our system. The full paper is available here: pdf and bib, and will be presented at CCS in Dallas.

If you are interested in doing similar work, do get in touch at mw@ccs.neu.edu and yans@asu.edu.